LiDAR Navigation Explained: From Basic Principles to Advanced Applications

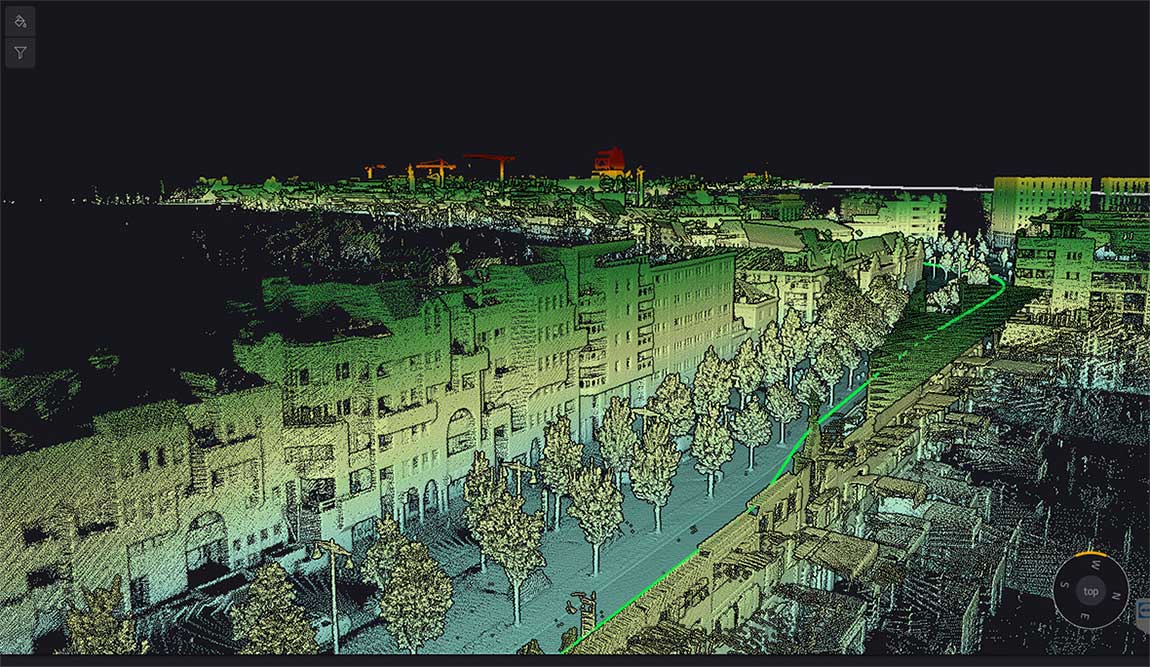

LiDAR (Light Detection and Ranging) is the lifeblood of modern navigation systems. It provides precise spatial measurements through an elegant yet powerful operating principle. Unlike passive sensing technologies, LiDAR actively emits laser pulses toward objects and measures how long each reflection takes to return to the sensor. This time-of-flight measurement yields distance through the formula d = (c × Δt) / 2, where c is the speed of light and Δt is the round-trip time delay.

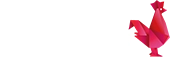

LiDAR’s power in navigation comes from its ability to capture environmental data quickly. Advanced systems can record millions of samples per second. Such high-frequency sampling creates dense point clouds that represent three-dimensional spaces in remarkable detail.

A LiDAR system’s heart consists of several critical components that work together. The laser emitter generates short, focused pulses that travel toward target objects while the photodetector captures the backscattered light. The system uses precise timing mechanisms to measure tiny delays between emission and detection. Navigation applications need this sensory data anchored in physical space through integration with positioning systems.

For LiDAR navigation to work, the laser scanning unit and the navigation hardware must be tightly coordinated. Global Navigation Satellite Systems (GNSS) provide geographical coordinates (latitude, longitude, height). An Inertial Measurement Unit (IMU) determines the sensor’s orientation through pitch, roll, and yaw measurements. Fusing these sources transforms raw distance measurements into georeferenced three-dimensional coordinates.
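The fusion step can be sketched in a few lines. This is a minimal illustration, not a production georeferencing pipeline: it assumes the GNSS position has already been converted to a local metric frame, uses a yaw-pitch-roll (Z-Y-X) rotation convention, and ignores lever-arm and boresight calibration. The function names `rotation_matrix` and `georeference` are hypothetical.

```python
import math

def rotation_matrix(roll, pitch, yaw):
    """Sensor-to-world rotation R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def georeference(point_sensor, sensor_position, roll, pitch, yaw):
    """Rotate a sensor-frame point by the IMU attitude, then shift by the GNSS position.

    Assumption: sensor_position is already expressed in a local metric frame
    (for example east, north, up), not raw latitude and longitude.
    """
    R = rotation_matrix(roll, pitch, yaw)
    return tuple(
        sum(R[i][j] * point_sensor[j] for j in range(3)) + sensor_position[i]
        for i in range(3)
    )

# A return 10 m straight ahead of a sensor that is yawed 90 degrees.
world_point = georeference((10.0, 0.0, 0.0), (100.0, 200.0, 50.0), 0.0, 0.0, math.pi / 2)
```

With a 90 degree yaw, the point 10 m ahead of the sensor lands 10 m along the second world axis from the sensor position.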

LiDAR navigation brings unique benefits. It works independently of ambient lighting conditions. The technology is also precise, with accuracy in the range of 15 to 25 cm, which makes it well suited to detailed environmental mapping applications.

LiDAR navigation has its limits. Water, asphalt, and tar surfaces absorb the laser signal. Fog and heavy cloud cover can reduce performance by scattering laser pulses before they reach their targets. Even so, LiDAR remains crucial across navigation domains, from autonomous vehicles to drone mapping and indoor robot guidance.

Basic principle of distance measurement using reflected waves

How LiDAR Systems Capture and Process Environmental Data

Modern LiDAR systems use advanced mechanisms that turn raw environmental data into useful navigation information. These systems work through exact measurement principles and specialized processing techniques to convert reflected light into precise spatial representations.

Time-of-Flight Measurement Principles in Modern LiDAR

LiDAR technology’s core operational principle centers on time-of-flight (ToF) measurement. This approach calculates distances using the formula d = (c × Δt)/2, where c represents light speed and Δt shows the time delay between emission and reception. Laser pulses bounce back to the sensor after hitting objects, and the sensor measures this time interval precisely.
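As a quick worked example of the formula above, the sketch below converts a round-trip delay into a distance and back. The function names are illustrative, and the speed of light in vacuum is used as an approximation for air.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s (close enough for air)

def tof_distance(delta_t_s):
    """Distance in meters from a round-trip delay in seconds: d = (c * dt) / 2."""
    return C * delta_t_s / 2.0

def round_trip_time(distance_m):
    """Inverse relation: the delay a target at distance_m would produce."""
    return 2.0 * distance_m / C

distance = tof_distance(100e-9)  # a 100 ns round trip is roughly 15 m
```

The same relation shows why timing electronics matter: an error of 1 ns in Δt shifts the computed distance by about 15 cm.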

Current systems use two main ToF methods. Direct Time-of-Flight (dToF) measures the actual travel time of laser pulses and converts this duration into distance. This method delivers reliable, low-power distance measurements and remains widely used because of its data reliability and low operating cost. Indirect Time-of-Flight (iToF) uses amplitude-modulated laser sources and measures the phase difference between transmitted and reflected light. The system then converts this phase shift to time and distance.
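The phase-to-distance conversion used by iToF can be sketched as follows. This assumes a single modulation frequency and the standard relation d = c·Δφ / (4π·f_mod); the function names are illustrative.

```python
import math

C = 299_792_458.0  # speed of light, m/s

def itof_distance(phase_rad, f_mod_hz):
    """Distance from the measured phase shift of an amplitude-modulated signal."""
    return C * phase_rad / (4.0 * math.pi * f_mod_hz)

def unambiguous_range(f_mod_hz):
    """Largest distance before the phase wraps past 2*pi and aliases."""
    return C / (2.0 * f_mod_hz)

# A half-cycle phase shift at 10 MHz modulation is half the unambiguous range.
d = itof_distance(math.pi, 10e6)
max_d = unambiguous_range(10e6)
```

The wrap-around limit is the main trade-off of this approach: a higher modulation frequency improves resolution but shortens the range that can be measured without ambiguity.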

Pulse vs. Continuous Wave LiDAR: Technical Differences

Pulse and continuous wave systems represent two distinct approaches to LiDAR operation. Pulse LiDAR releases short, high-intensity bursts that last only nanoseconds or microseconds. These systems reach high peak power while keeping average power low. Concentrating the energy into brief pulses produces strong returns from distant targets, and because the laser emits only intermittently, average electrical power stays low.

Continuous wave (CW) LiDAR creates an uninterrupted beam of light. Frequency Modulated Continuous Wave (FMCW) systems use “chirped” light: laser beams that emit continuously with cyclically changing frequencies. FMCW systems have a key advantage: they measure object velocity directly through the Doppler effect. ToF systems, by contrast, typically need multiple successive measurements to estimate velocity.
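A rough sketch of how one FMCW measurement yields both range and velocity is shown below. It assumes a triangular chirp and a sign convention in which an approaching target lowers the up-chirp beat frequency and raises the down-chirp beat frequency; real systems differ in convention and in how beat frequencies are extracted. The function name and parameters are hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s

def fmcw_range_velocity(f_beat_up, f_beat_down, bandwidth_hz, chirp_time_s, wavelength_m):
    """Separate range and Doppler contributions from the two beat frequencies."""
    f_range = (f_beat_up + f_beat_down) / 2.0    # part caused by round-trip delay
    f_doppler = (f_beat_down - f_beat_up) / 2.0  # part caused by target motion
    distance = C * f_range * chirp_time_s / (2.0 * bandwidth_hz)
    velocity = wavelength_m * f_doppler / 2.0    # positive means approaching (assumed convention)
    return distance, velocity

# Synthetic check: a target at 50 m closing at 10 m/s, 1550 nm laser,
# 1 GHz chirp bandwidth swept in 10 microseconds.
B, T, LAM = 1e9, 10e-6, 1550e-9
f_r = 2.0 * 50.0 * B / (C * T)
f_d = 2.0 * 10.0 / LAM
distance, velocity = fmcw_range_velocity(f_r - f_d, f_r + f_d, B, T, LAM)
```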

3D Point Cloud Generation from Raw LiDAR Data

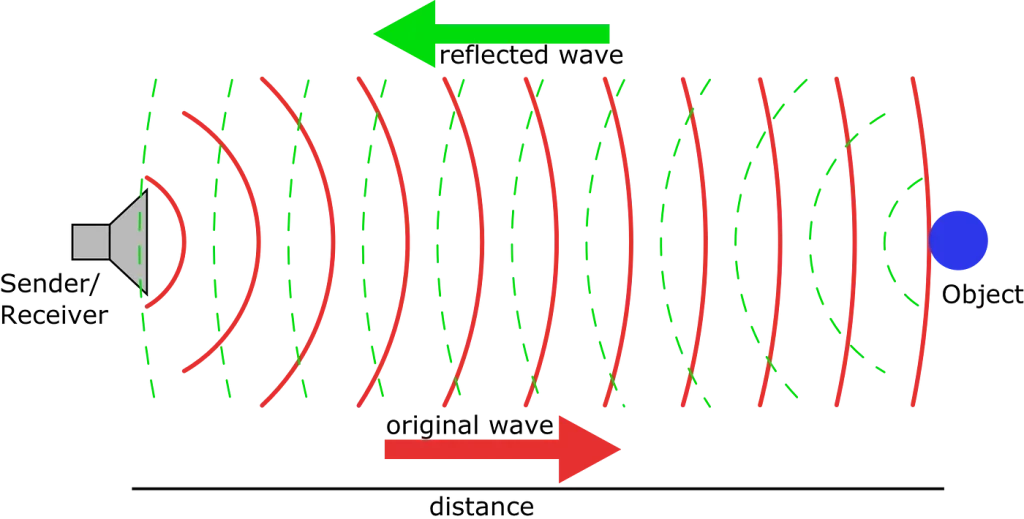

Raw LiDAR data consists of unfiltered, unstructured points stored as tabular data with coordinates and attributes such as reflectance values. Processing turns this raw information into structured 3D point clouds that represent real-world environments accurately.

The generation process follows several key steps. Point cloud filtering and cleaning removes incorrect points through methods like density-based filtering, which spots and removes points based on local density evaluation. Point classification labels individual points as ground, vegetation, buildings, or other elements. Surface modeling creates triangles and polygons between nearby points to generate Digital Elevation Models (DEMs) and Digital Surface Models (DSMs).
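The density-based filtering step can be illustrated with a simple radius outlier filter. This is a brute-force sketch for small point sets, with hypothetical parameter names; production tools use spatial indexes such as k-d trees to avoid the quadratic cost.

```python
import math

def radius_outlier_filter(points, radius, min_neighbors):
    """Keep only points that have at least min_neighbors other points within radius."""
    kept = []
    for i, p in enumerate(points):
        neighbors = sum(
            1
            for j, q in enumerate(points)
            if i != j and math.dist(p, q) <= radius
        )
        if neighbors >= min_neighbors:
            kept.append(p)
    return kept

cloud = [
    (0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0), (0.1, 0.1, 0.0),
    (10.0, 10.0, 10.0),  # isolated point, likely noise
]
cleaned = radius_outlier_filter(cloud, radius=0.5, min_neighbors=2)
```

The isolated point has no neighbors within 0.5 m, so it is dropped while the four clustered points survive.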

The data usually uses the industry-standard LAS file format (.las) or its compressed variant (.laz). These formats store and transfer millions of points from detailed LiDAR scans efficiently.

Industrial site scanned with LiDAR for structural mapping

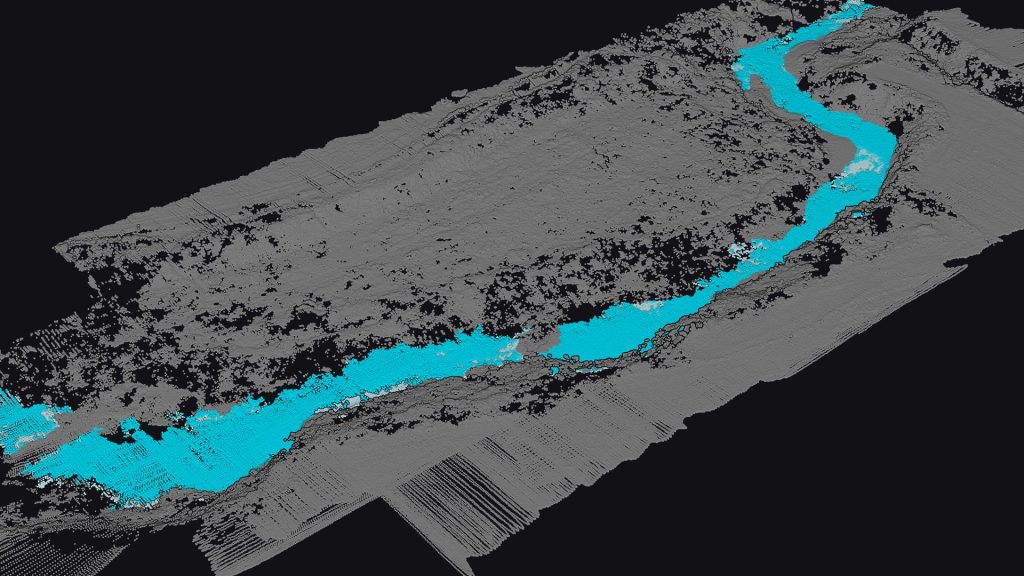

Riverbed mapping using LiDAR point cloud classification

Core Components of LiDAR Navigation Systems

LiDAR navigation systems need four essential hardware components that work together to sense and interpret the surrounding environment accurately. These components have specialized functions that help create precise spatial maps for robotic navigation and autonomous systems.

Laser Emitters and Receivers: Technical Specifications

The laser source sits at the core of every LiDAR system and generates energy pulses to map the environment. Most ground-based LiDAR systems use near-infrared wavelengths of 960-1550 nm, while bathymetric systems need visible green 532 nm sources to penetrate water.

The receiver subsystems contain detectors that turn photons into electrical current. Today’s LiDAR navigation systems use three main detector technologies:

- Avalanche Photodiodes (APDs): These work in linear mode below breakdown voltage for standard detection

- Single-Photon Avalanche Diodes (SPADs): These work in Geiger mode above breakdown voltage and detect individual photons with exceptional sensitivity

- Silicon Photomultipliers (SiPM): These connect multiple SPADs in parallel to detect total photon count

The detector’s sensitivity relates to signal-to-noise ratio and maximum range capability. The system needs accurate timing electronics paired with sensitive photon detection to determine the distance to navigational obstacles.

Scanning Mechanisms: Mechanical vs. Solid-State LiDAR

Mechanical LiDAR systems use moving parts like rotating mirrors or entire assemblies to scan their surroundings. These moving parts allow wide, dynamic scanning ranges up to 360° horizontally and capture big areas in high detail. On the other hand, solid-state LiDARs guide laser beams through electronic methods alone, which removes moving parts to improve reliability.

The main solid-state technologies include:

- MEMS (Micro-electromechanical systems): These use tiny mirrors that move to scan environments and are common in automotive applications

- OPA (Optical Phased Arrays): These are true solid-state solutions that adjust light phase to direct beams without physical movement

Hybrid LiDAR systems combine both technologies. They use electronic steering for quick coverage while adding mechanical elements to extend range and improve resolution. Solid-state systems are more reliable and compact, but mechanical LiDARs provide better range and detail when you need detailed environmental mapping.

Signal Processing Hardware for Real-time Navigation

Signal processing architecture is crucial for LiDAR navigation. Modern systems combine a CPU, system-on-chip (SoC), and field-programmable gate array (FPGA) to handle time-of-flight calculations and create point clouds. These processing subsystems tackle key challenges like signal crosstalk, saturation, noise filtering, and sampling rate limits.

Navigation applications need immediate decision making. The processing architecture runs advanced algorithms that perform Gaussian curve fitting, waveform averaging, digital filtering, and deconvolution to extract meaningful environmental data. Autonomous vehicles need this processing to happen quickly to navigate safely at operational speeds.
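Two of the techniques named above, waveform averaging and Gaussian curve fitting, can be sketched compactly. The example assumes a clean, strictly positive return pulse that is roughly Gaussian, and uses three-point log-parabolic interpolation as a lightweight stand-in for a full least-squares fit. Function names are illustrative.

```python
import math

def average_waveforms(waveforms):
    """Average several digitized returns sample by sample to suppress random noise."""
    n = len(waveforms)
    return [sum(samples) / n for samples in zip(*waveforms)]

def gaussian_peak_index(waveform):
    """Sub-sample peak location of a Gaussian-shaped pulse.

    The logarithm of a Gaussian is a parabola, so fitting a parabola through
    the log of the three samples around the maximum recovers the center.
    Assumes all three samples are strictly positive.
    """
    k = max(range(1, len(waveform) - 1), key=lambda i: waveform[i])
    a, b, c = (math.log(waveform[k + d]) for d in (-1, 0, 1))
    return k + 0.5 * (a - c) / (a - 2.0 * b + c)

# Synthetic pulse centered between samples at index 12.3.
pulse = [math.exp(-((i - 12.3) ** 2) / (2.0 * 2.0 ** 2)) for i in range(25)]
peak = gaussian_peak_index(pulse)
```

Multiplying the fractional peak index by the sampling period gives the arrival time, which is how a system can resolve delays finer than its sample spacing.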

Power and Thermal Management Considerations

Heat management is a major challenge in LiDAR navigation systems. Lasers run at about 10% efficiency, so roughly 90% of input energy turns into heat instead of light. This heat load affects several system components:

- Laser diode performance: Too much heat causes wavelength shifts, lower output, and faster degradation

- Detector function: Thermal noise reduces signal-to-noise ratio and accuracy

- Optical components: Temperature changes distort beams and cause misalignment

Good heat management uses both preventive and transfer methods. Prevention focuses on careful enclosure design and material choice, while transfer methods create conductive paths from heat sources to external surfaces. Solid-state LiDAR systems can reduce their maximum temperature rise by about 20% through optimized beam scanning with simple forced air cooling.

YellowScan Navigator LiDAR system for advanced geospatial mapping

SLAM Algorithms: The Brain Behind LiDAR Navigation

Software that interprets LiDAR data powers every autonomous robot as it moves through complex environments. SLAM algorithms act as the brain that helps machines understand their surroundings and know their exact position.

Simultaneous Localization and Mapping Fundamentals

Robotic navigation faces a classic chicken-or-egg problem: how can machines create maps of unknown spaces while figuring out where they are on that same map? This challenge is the foundation of autonomous navigation systems that we see in self-driving cars and warehouse robots.

SLAM has two main processes that work together. The prediction step estimates where the robot will be next based on its movement and current position. The correction step then fine-tunes this guess using LiDAR sensors that measure distances to nearby objects. These steps repeat continuously to improve position accuracy and build better maps.
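The predict and correct cycle can be illustrated with a one-dimensional Kalman filter. This is a deliberately reduced sketch: it covers only the localization half of SLAM, assumes the robot moves along a line toward a wall at a known position, and uses made-up noise values. Full SLAM also estimates the map itself.

```python
def predict(x, p, u, q):
    """Prediction: shift the position estimate by the commanded motion u.

    x is the position estimate, p its variance, q the motion noise variance.
    Uncertainty grows because motion is never executed perfectly.
    """
    return x + u, p + q

def correct(x, p, z, r):
    """Correction: blend the prediction with a LiDAR-derived position z.

    r is the measurement noise variance. The gain k weights the measurement
    more heavily when the prediction is uncertain.
    """
    k = p / (p + r)
    return x + k * (z - x), (1.0 - k) * p

WALL_POSITION = 10.0  # assumed known landmark, meters
x, p = 0.0, 1.0       # initial estimate and variance

for motion, lidar_range in [(1.0, 8.8), (1.0, 7.9), (1.0, 7.05)]:
    x, p = predict(x, p, motion, q=0.5)
    z = WALL_POSITION - lidar_range  # position implied by the range reading
    x, p = correct(x, p, z, r=0.5)
```

Each pass through the loop shrinks the variance `p` after correction, which is the repeated refinement described above.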

Today’s SLAM systems work in one of two ways. Some use filtering methods like Extended Kalman Filters and particle filters to calculate probable robot positions and map features. Others take a graph-based approach, creating networks that show how landmarks relate to each other in space. Graph methods often work better in complex environments.

Feature Extraction from LiDAR Point Clouds

Raw LiDAR data needs processing before SLAM algorithms can use it. Feature extraction turns scattered point clouds into patterns and landmarks that robots can use as reference points.

LiDAR SLAM looks for geometric shapes in point clouds, unlike camera systems that analyze pixels. The key features it finds include:

- Edges and planes: Natural geometric shapes that stay stable between scans

- Corners and distinct surfaces: Unique markers that help align different views

- Ground segmentation: Telling flat ground apart from obstacles

Engineers use different methods to find these features. Simple approaches use math operations to spot patterns, while region growing combines similar points into objects. Advanced techniques like RANSAC help identify flat surfaces in point clouds.
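A basic RANSAC plane fit, of the kind often used for ground segmentation, might look like the sketch below. It is written in plain Python for clarity rather than speed, the threshold and iteration count are arbitrary illustrative values, and a fixed seed is used only to make the example repeatable.

```python
import random

def plane_from_points(p1, p2, p3):
    """Return (unit normal, offset d) with n.p + d = 0, or None if the points are collinear."""
    ux, uy, uz = (p2[i] - p1[i] for i in range(3))
    vx, vy, vz = (p3[i] - p1[i] for i in range(3))
    n = (uy * vz - uz * vy, uz * vx - ux * vz, ux * vy - uy * vx)
    norm = sum(c * c for c in n) ** 0.5
    if norm < 1e-12:
        return None
    n = tuple(c / norm for c in n)
    return n, -sum(n[i] * p1[i] for i in range(3))

def ransac_plane(points, threshold, iterations=200, seed=0):
    """Repeatedly fit a plane to 3 random points and keep the one with the most inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [
            p for p in points
            if abs(sum(n[i] * p[i] for i in range(3)) + d) <= threshold
        ]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers

# Flat ground at z = 0 plus a few obstacle points above it.
ground = [(float(x), float(y), 0.0) for x in range(5) for y in range(5)]
obstacles = [(1.5, 1.5, 1.0), (2.5, 0.5, 1.5), (0.5, 3.5, 2.0), (3.5, 2.5, 0.8), (2.2, 2.2, 1.2)]
(normal, offset), ground_points = ransac_plane(ground + obstacles, threshold=0.05)
```

Points that fall outside the winning plane's threshold are the candidates for obstacles or other features.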

Good feature detection makes SLAM work better. Engineers must find the right balance between distinct features and quick processing. This balance matters most when robots need split-second updates to move safely.

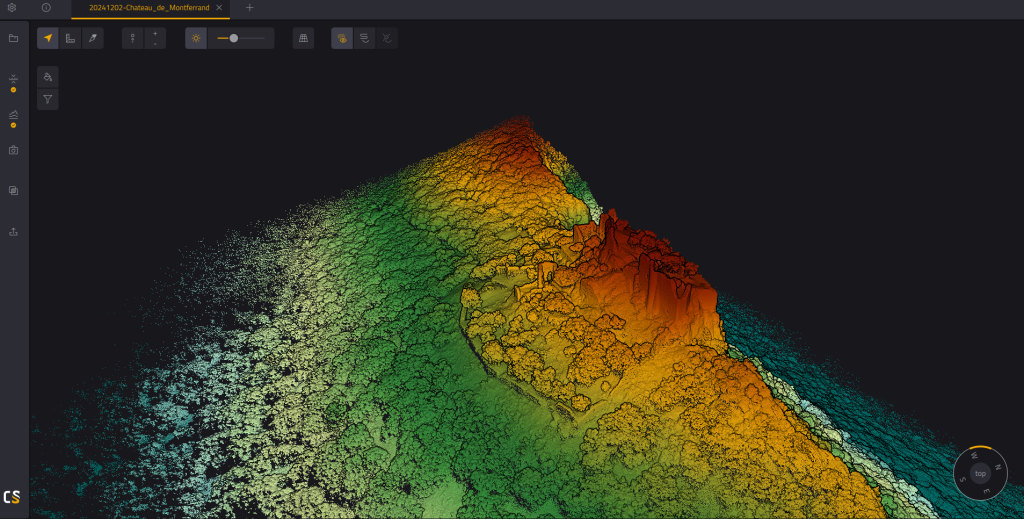

3D point cloud of Montferrand Castle displayed in CloudStation

Advanced Applications of LiDAR in Robotic Navigation

LiDAR systems have grown beyond theory to enable real-world autonomous navigation in a variety of environments. These versatile sensing systems help machines see, interpret, and steer themselves through complex surroundings with remarkable precision, from busy urban streets to GPS-denied indoor spaces.

Autonomous Vehicle Perception Systems

LiDAR is the lifeblood of modern autonomous vehicle (AV) perception, enabling accurate environmental modeling even in tough conditions. Today’s automotive LiDAR systems can detect and classify multiple moving objects at once, including pedestrians, cyclists, and other vehicles. This classification supports safe navigation decisions in complex traffic scenarios.

The system works great in low-light and nighttime environments because LiDAR doesn’t need ambient light to function. Its live object tracking makes vehicles more aware of their surroundings, especially in challenging conditions like poor light or bad weather.

Automotive LiDAR integration needs both physical placement and smart software algorithms. These algorithms process sensor data and extract useful information about object detection, classification, and tracking. Developers usually combine LiDAR with cameras, radar, and GPS to build complete perception systems that make up for individual sensor limitations.

Indoor Robot Navigation in GPS-denied Environments

LiDAR-based solutions excel at positioning mobile robots with impressive accuracy inside buildings where satellite navigation doesn’t work well. Modern systems can pinpoint absolute position within 2cm by using 3D maps as geometric references. This precision is invaluable in warehouses, factories, and other industrial settings that need exact navigation.

Indoor LiDAR navigation uses registration algorithms to line up current observations with 3D geometric maps. This alignment relies on feature matching and the Iterative Closest Point (ICP) algorithm. These techniques minimize the point-to-point distance between LiDAR measurements and reference maps, which enables accurate positioning without GPS signals.
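The registration idea can be sketched with a small point-to-point ICP in two dimensions. This is an illustrative simplification: it works in 2D, uses brute-force nearest-neighbor search, runs a fixed number of iterations, and assumes the initial misalignment is small relative to the point spacing. All function names are hypothetical.

```python
import math

def best_rigid_transform(src, dst):
    """Least-squares rotation and translation mapping paired 2D points src onto dst."""
    n = len(src)
    cxs, cys = sum(p[0] for p in src) / n, sum(p[1] for p in src) / n
    cxd, cyd = sum(p[0] for p in dst) / n, sum(p[1] for p in dst) / n
    s_cos = s_sin = 0.0
    for (ax, ay), (bx, by) in zip(src, dst):
        ax, ay, bx, by = ax - cxs, ay - cys, bx - cxd, by - cyd
        s_cos += ax * bx + ay * by
        s_sin += ax * by - ay * bx
    theta = math.atan2(s_sin, s_cos)
    c, s = math.cos(theta), math.sin(theta)
    return theta, cxd - (c * cxs - s * cys), cyd - (s * cxs + c * cys)

def transform(points, theta, tx, ty):
    """Apply a 2D rotation by theta followed by a translation (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]

def icp_2d(scan, reference, iterations=20):
    """Align scan to reference; returns the total (theta, tx, ty) and the aligned points."""
    current = list(scan)
    for _ in range(iterations):
        # Pair every scan point with its closest reference point.
        matched = [min(reference, key=lambda q: math.dist(p, q)) for p in current]
        current = transform(current, *best_rigid_transform(current, matched))
    # Recover the accumulated pose correction from the original and aligned scans.
    return best_rigid_transform(scan, current), current

# Reference map: an L-shaped wall. The scan is the same wall seen from a slightly wrong pose.
reference = [(float(i), 0.0) for i in range(6)] + [(0.0, float(j)) for j in range(1, 5)]
scan = transform(reference, 0.05, 0.1, -0.05)
(theta, tx, ty), aligned = icp_2d(scan, reference)
```

The recovered transform is the correction to apply to the robot's pose estimate; here the rotation comes out close to -0.05 rad, undoing the simulated error.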

Navigation inside buildings is challenging because systems must process large maps of substantial areas while dealing with moving people or shifted furniture. Good solutions need to process 3D data over 20 times per second to give frequent position updates.

Aerial Drone Mapping and Obstacle Avoidance

Drone-mounted LiDAR systems have reshaped terrain mapping by capturing detailed topographical data efficiently. Advanced systems can collect up to 1 million points per second, which creates high-resolution digital elevation models (DEMs) needed for environmental analysis and planning.

LiDAR serves two purposes in autonomous flight: environmental mapping and obstacle avoidance. The obstacle avoidance functionality uses three modules: environment perception, algorithm processing, and motion control. This combined system helps drones spot potential collisions and take evasive action on their own.

LiDAR-equipped drones work exceptionally well for specialized inspections like cellular tower assessment, accident scene documentation, and agricultural monitoring. These platforms can work safely in tough environments while collecting precise spatial data that would be dangerous for humans to gather.

Marine and Underwater LiDAR Navigation Applications

Bathymetric LiDAR systems are special adaptations made for underwater environments. These systems use wavelengths that can penetrate water, usually green light at 532 nm instead of the near-infrared used on land. This green light maps clear rivers and shallow coastal bathymetry from the air, which eliminates risky underwater surveying.

Underwater applications go beyond depth measurement to include detailed benthic habitat mapping for marine ecology studies. Advanced bathymetric systems can measure depths down to 25 meters with exceptional clarity.
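Depth estimation follows the same time-of-flight logic, with one correction: light travels more slowly in water. The sketch below assumes a beam pointing straight down (so refraction bending can be ignored), a refractive index of about 1.33 for water, and that the surface and bottom returns have already been separated in the waveform. The names are illustrative.

```python
C = 299_792_458.0  # speed of light in vacuum, m/s
N_WATER = 1.33     # assumed refractive index of water

def water_depth(surface_return_s, bottom_return_s, n_water=N_WATER):
    """Depth from the delay between the water-surface and bottom returns.

    Light travels at c / n in water, so the in-water round trip is scaled by n.
    """
    dt = bottom_return_s - surface_return_s
    return C * dt / (2.0 * n_water)

# Delay that a 25 m deep bottom would produce after the surface return.
delay_25m = 2.0 * 25.0 * N_WATER / C
depth = water_depth(1.0e-6, 1.0e-6 + delay_25m)
```

For the 25 m depth mentioned above, the bottom return arrives only about 222 ns after the surface return, which illustrates how tight the timing requirements are.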

Busy urban street (© Unsplash)

Marine ecology studies (© Unsplash)

Key Takeaways on LiDAR Navigation Technology

Key Takeaways and Future Views

LiDAR technology is the lifeblood of modern autonomous navigation systems. It combines exact measurements with smart data processing. Through time-of-flight principles and advanced SLAM algorithms, LiDAR helps machines create detailed maps of their surroundings and know their position within them.

This versatile technology shines in many different areas. Self-driving cars use LiDAR to spot and classify objects instantly. Indoor robots guide themselves with amazing accuracy even without GPS. LiDAR proves its worth in specialized tasks like mapping underwater terrain.

New technical breakthroughs help solve old problems. Solid-state systems work more reliably than their mechanical counterparts. Better signal processing helps machines see through atmospheric interference. These improvements lead to stronger and more efficient navigation tools for every type of application.

LiDAR navigation’s future looks bright. New technologies like optical phased arrays and smarter SLAM algorithms redefine the limits of autonomous systems. Engineers and developers working with robots, self-driving cars, and environmental mapping need to understand these basic concepts.

LiDAR navigation evolves faster than ever as technical innovation drives real-world uses. Better hardware joins forces with smarter algorithms to create new possibilities for autonomous navigation on the ground, underwater, and in the air.

Frequently Asked Questions

What is the basic principle behind LiDAR technology?

LiDAR technology operates on the principle of time-of-flight measurement. It emits laser pulses and measures the time taken for the light to reflect back from objects, allowing for precise distance calculations and environmental mapping.

How does LiDAR contribute to autonomous navigation?

LiDAR enables autonomous navigation by creating detailed 3D point clouds of the environment. These point clouds are processed using SLAM algorithms, allowing robots and vehicles to simultaneously map their surroundings and determine their position within that map.

What are the key components of a LiDAR navigation system?

A LiDAR navigation system consists of laser emitters and receivers, scanning mechanisms (mechanical or solid-state), signal processing hardware, and power management systems. These components work together to capture, process, and interpret environmental data for navigation purposes.

How does LiDAR perform in different environments?

LiDAR is versatile across various environments. It excels in autonomous vehicles for object detection, indoor navigation in GPS-denied spaces, aerial mapping with drones, and even underwater applications using specialized bathymetric LiDAR systems.

What are some limitations of LiDAR technology?

While highly effective, LiDAR has some limitations. It can experience signal absorption on certain surfaces like water and asphalt, and its performance may degrade in adverse weather conditions such as heavy fog or cloud cover. Additionally, the cost of high-quality LiDAR systems can be a constraint for some applications.